In the fast-paced world of web development, scalability and reliability are paramount to the success of modern web applications. As businesses grow and user demands increase, applications must be able to handle surges in traffic and data load efficiently, without compromising on performance or user experience. This is where Docker and Kubernetes come into play, revolutionizing how developers build, deploy, and manage scalable and reliable web applications.

The Importance of Scalability and Reliability

Scalability ensures that a web application can handle increased loads gracefully by adding resources, either vertically (more powerful hardware) or horizontally (more instances of the application). Reliability, on the other hand, ensures that the application remains available and performs consistently under varying conditions. Together, these attributes are crucial for maintaining user satisfaction, trust, and engagement, especially in an era where users expect instant and uninterrupted access to digital services.

Docker: Revolutionizing Containerization

Docker has become synonymous with containerization, providing a platform for developers to package and distribute applications in containers. These containers encapsulate the application’s code, libraries, and dependencies in a standardized unit for software development, ensuring consistency across environments from development to production. Docker simplifies the deployment process, reduces conflicts between running applications, and enhances the manageability and portability of web applications, making it easier to scale and update without extensive overhead.

Kubernetes: Mastering Application Orchestration

Kubernetes, an open-source platform for automating the deployment, scaling, and management of containerized applications, works hand-in-hand with Docker to facilitate scalable web application development. It provides the orchestration and management capabilities needed to deploy containers at scale, manage application health, and automate operational tasks. With Kubernetes, developers can ensure their applications are resilient, distributed across multiple servers, and capable of handling real-time changes in traffic and workload.

Together, Docker and Kubernetes represent a powerful combination for building scalable and reliable web applications. They enable developers to create systems that are not only flexible and efficient but also aligned with modern cloud-native development practices. In the following sections, we will delve into the specifics of how Docker and Kubernetes can be used to build web applications that are robust, scalable, and easy to manage, ensuring they can meet the demands of today’s dynamic digital landscape.

Understanding Docker and Kubernetes

To build scalable web applications effectively, a solid understanding of Docker and Kubernetes is essential. These tools have revolutionized the approach to development, deployment, and management of web applications, offering solutions that enhance scalability, portability, and consistency.

Basics of Containerization with Docker

Docker is at the forefront of containerization technology, providing a platform for developers to encapsulate their applications and their dependencies into containers. This encapsulation ensures that the application can run in any environment that has Docker installed, solving the “it works on my machine” problem.

- How Docker Works:

Docker uses containerization to isolate applications in self-contained units, enabling them to run independently of the underlying infrastructure. This isolation reduces conflicts between applications and streamlines the development-to-deployment lifecycle.

- Role in Developing Scalable Web Applications:

Docker simplifies the scaling process by allowing applications to be replicated across multiple containers easily. These containers can be deployed across different servers, leading to a highly scalable and distributed system. Docker’s lightweight nature also means that these containers require fewer resources than traditional virtual machines, enhancing efficiency.

Introduction to Kubernetes

Kubernetes is an open-source platform designed to automate the deployment, scaling, and operation of containerized applications. It builds on the containerization foundation laid by Docker, providing the tools needed to manage complex containerized application environments at scale.

- Purpose of Kubernetes:

Kubernetes aims to simplify the management of containerized applications across various environments. It provides features such as self-healing, automatic scaling, load balancing, and service discovery, making it easier for developers to deploy and manage their applications reliably and at scale.

- Core Components:

- Pods: The smallest deployable units in Kubernetes, containing one or more containers that share the same context and resources.

- Nodes: Physical or virtual machines where Kubernetes runs containers.

- Services: Abstractions that define logical sets of pods and policies for accessing them.

- Deployments: Higher-level management entities that control pods, including their scaling and lifecycle.

- Orchestration of Containerized Applications:

Kubernetes orchestrates containerized applications by managing the lifecycle of containers, ensuring they are running as expected, scaling them up or down based on demand, and distributing them across nodes for load balancing and efficient resource use.

By leveraging Docker and Kubernetes, developers can create web applications that are not only scalable and reliable but also maintainable and flexible. Docker’s containerization capabilities ensure consistent and conflict-free deployment, while Kubernetes’ orchestration features automate and manage these containers at scale, providing a robust ecosystem for developing scalable web applications.

Setting Up the Development Environment

Creating a solid development environment with Docker and Kubernetes is crucial for building scalable web applications. Here’s how to set up Docker and Kubernetes in your local environment, as well as how to initiate a Kubernetes cluster, either locally or in the cloud.

Installing Docker

- Download Docker:

- Visit the Docker website and download Docker Desktop for your operating system (Windows, macOS, or Linux).

- Install Docker:

- Run the installer and follow the on-screen instructions to install Docker Desktop. If you want a local cluster, enable Kubernetes in the Docker Desktop settings after installation; Docker Desktop ships with a built-in single-node Kubernetes cluster for development purposes.

- Verify Installation:

- Open a terminal or command prompt and run `docker --version` to verify that Docker is installed correctly.

- Run a Test Container:

- To ensure Docker is working properly, run a test container by executing `docker run hello-world`. This command downloads a test image and runs it in a container, displaying a welcome message if successful.

Setting Up a Local Development Environment with Docker

- Create a Dockerfile:

- In your project directory, create a `Dockerfile` that defines how your application’s container image should be built. Specify the base image, working directory, dependencies, and the commands to run the application.

- Build Your Docker Image:

- Run `docker build -t your-app-name .` to build a Docker image for your application based on the Dockerfile.

- Run Your Application in Docker:

- Use `docker run -p local-port:container-port your-app-name` to run your application inside a Docker container, accessible via the specified local port.

Introduction to Kubernetes Clusters

- Kubernetes clusters are sets of nodes managed by a control plane. They provide the environment to run and manage your containerized applications with Kubernetes.

Setting Up a Local or Cloud-Based Kubernetes Cluster

- Local Kubernetes Cluster:

- Docker Desktop includes a standalone Kubernetes server that runs on your local machine. Enable it through the Docker Desktop settings to start using Kubernetes immediately.

- Alternatively, tools like Minikube can create a local Kubernetes cluster for development and testing.

- Cloud-Based Kubernetes Cluster:

- Major cloud providers like AWS, Google Cloud, and Azure offer managed Kubernetes services (e.g., EKS, GKE, AKS). Use their consoles or CLI tools to create and configure a Kubernetes cluster.

- Follow the provider’s documentation to set up kubectl, the Kubernetes command-line tool, to interact with your cloud-based cluster.

- Verify Cluster Setup:

- Run `kubectl get nodes` to verify that your Kubernetes cluster is set up and that you can communicate with it using kubectl.

Setting up Docker and Kubernetes correctly forms the foundation of your scalable web application development environment. Whether you’re working locally or in the cloud, these tools provide the infrastructure needed to build, deploy, and manage your applications effectively.

Containerizing a Web Application with Docker

Containerizing a web application with Docker involves creating a Dockerfile, building a container image, and running it locally to ensure everything works as expected. Here’s how to go about it along with some best practices for creating efficient and secure Docker images.

Creating a Dockerfile

- Initialize the Dockerfile:

- In the root directory of your web application, create a file named `Dockerfile` (without any extension).

- This file will contain the instructions for building the Docker image of your application.

- Define the Base Image:

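- Start with a base image that matches your application’s environment. For instance, if you’re using Node.js, you might start with a currently supported LTS tag such as `FROM node:22` (older tags like `node:14` are end-of-life and no longer receive security fixes).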

- Set the Working Directory:

- Use the `WORKDIR` instruction to set the working directory inside the container, e.g., `WORKDIR /app`.

- Copy Application Files:

- Use the `COPY` instruction to copy your application files into the container, e.g., `COPY . .`.

- Install Dependencies:

- Run commands to install your application’s dependencies, such as `RUN npm install` for a Node.js app.

- Expose the Application Port:

- Use the `EXPOSE` instruction to document the port the application listens on, e.g., `EXPOSE 3000`. `EXPOSE` does not publish the port by itself; the actual mapping happens at run time with `docker run -p`.

- Define the Run Command:

- Use the `CMD` instruction to specify the command that runs your application, e.g., `CMD ["npm", "start"]`.
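Put together, the steps above might produce a Dockerfile like the one in this sketch. It is only an illustration for a Node.js app: the `demo-app` directory is a hypothetical scratch location, the `node:22` tag is an assumption (substitute the version your app targets), and port 3000 is taken from the example above.

```shell
# Scratch directory so the example does not touch an existing project.
mkdir -p demo-app

# Write a Dockerfile assembled from the steps above.
cat > demo-app/Dockerfile <<'EOF'
# Base image: a Node.js LTS tag (assumed; use the version your app targets).
FROM node:22

# All following instructions run relative to /app inside the image.
WORKDIR /app

# Copy the application source from the build context into /app.
COPY . .

# Install the dependencies declared in package.json.
RUN npm install

# Document the port the app listens on (this does not publish it).
EXPOSE 3000

# Default command when a container starts from this image.
CMD ["npm", "start"]
EOF

# Show the generated file.
cat demo-app/Dockerfile
```

Copying the application source into `demo-app` alongside this file would make the directory a usable build context.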

Building the Docker Container

- Build the Image:

- Open a terminal in your project directory and run `docker build -t app-name:version .` to create a Docker image. Replace `app-name:version` with your application’s name and version.

- Run the Container:

- After building the image, run it in a container using `docker run -p host-port:container-port app-name:version`. Match `host-port` and `container-port` to your app’s needs.

- Test the Application:

- Ensure the application is running correctly in the Docker container by accessing it through a web browser or API client at `http://localhost:host-port`.
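With concrete values filled in, the build, run, and test steps could look like the following sketch. The image name `my-web-app:1.0.0`, the container name, and the ports 8080 and 3000 are all hypothetical, and the script assumes a Dockerfile exists in the current directory and that `curl` is installed.

```shell
# Hypothetical values for illustration; adjust them to your application.
IMAGE="my-web-app:1.0.0"
NAME="my-web-app"
HOST_PORT=8080
CONTAINER_PORT=3000
URL="http://localhost:${HOST_PORT}"

# Only attempt the build when the Docker CLI is available.
if command -v docker >/dev/null 2>&1; then
  # Build from the Dockerfile in the current directory, then start a
  # detached container that maps the host port to the container port.
  if docker build -t "$IMAGE" . &&
     docker run -d --name "$NAME" -p "${HOST_PORT}:${CONTAINER_PORT}" "$IMAGE"; then
    # Give the app a moment to start, then request the root path;
    # on failure, print the container logs to help with debugging.
    sleep 2
    curl -fsS "$URL" || docker logs "$NAME"
  else
    echo "docker build or docker run failed" >&2
  fi
fi

echo "Application expected at $URL"
```

When you are done, `docker rm -f my-web-app` stops and removes the test container.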

Best Practices for Creating Docker Images

- Use Official Base Images:

- Prefer an official base image from Docker Hub. Official images are maintained and patched regularly, which gives you a more trustworthy starting point than an arbitrary community image.

- Minimize Image Layers:

- Combine related commands into single `RUN` statements where possible to reduce the number of layers and the overall size of the image.

- Clean Up:

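- Remove unnecessary files and dependencies in the same `RUN` instruction that created them. Files deleted in a later layer still occupy space in the earlier one, so cleaning up afterwards does not shrink the image.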

- Avoid Storing Sensitive Data:

- Never store sensitive data like passwords or API keys in the Docker image. Use environment variables or secrets management tools instead.

- Use Tags:

- Tag your Docker images with specific versions to maintain a clear versioning history and facilitate rollback if needed.

- Regularly Update and Scan Images:

- Regularly update your Docker images to include the latest security patches. Use a scanner such as Docker Scout (the successor to the retired `docker scan` command) or Trivy to detect vulnerabilities within your images.
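Several of these practices can be combined in one Dockerfile. The sketch below is one possible arrangement for a Node.js app, not a definitive template: the `demo-app-slim` directory, the `node:22-alpine` tag, and the `server.js` entry point are assumptions, and it expects a `package-lock.json` to exist so that `npm ci` can run.

```shell
# Separate scratch directory for the hardened example.
mkdir -p demo-app-slim

# Keep local dependencies, VCS data, and local env files out of the build context.
cat > demo-app-slim/.dockerignore <<'EOF'
node_modules
.git
.env
npm-debug.log
EOF

cat > demo-app-slim/Dockerfile <<'EOF'
# Official image pinned to a specific tag (assumed; pick the LTS your app targets).
FROM node:22-alpine

WORKDIR /app

# Copy only the dependency manifests first so this layer stays cached
# until package.json or package-lock.json changes.
COPY package.json package-lock.json ./

# Install production dependencies and clear the npm cache in the same layer,
# so the cache never ends up in the image.
RUN npm ci --omit=dev && npm cache clean --force

# Copy the rest of the source (filtered by .dockerignore).
COPY . .

# Run as the unprivileged "node" user that the official image provides.
USER node

EXPOSE 3000

# server.js is a hypothetical entry point.
CMD ["node", "server.js"]
EOF

# List what was generated.
ls -A demo-app-slim
```

Building it with an explicit version tag, for example `docker build -t my-web-app:1.0.1 demo-app-slim`, keeps each release identifiable for later rollbacks.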

By following these steps and best practices, you can successfully containerize your web application with Docker, creating efficient and secure container images that are ready for deployment and scaling in any environment.

Deploying Web Applications on Kubernetes

Deploying web applications on Kubernetes involves understanding key Kubernetes objects and creating manifests that define how the application should run and be managed within the cluster. Here’s an overview of the essential components and how to deploy and manage web applications in Kubernetes.

Overview of Kubernetes Objects

- Pods:

- The smallest and simplest Kubernetes object. A pod represents a single instance of a running process in your cluster and can contain one or more containers.

- Deployments:

- Manage the deployment of Pods, ensuring that a specified number of Pod replicas are running at any given time. Deployments handle the rollout of updates and changes to your application.

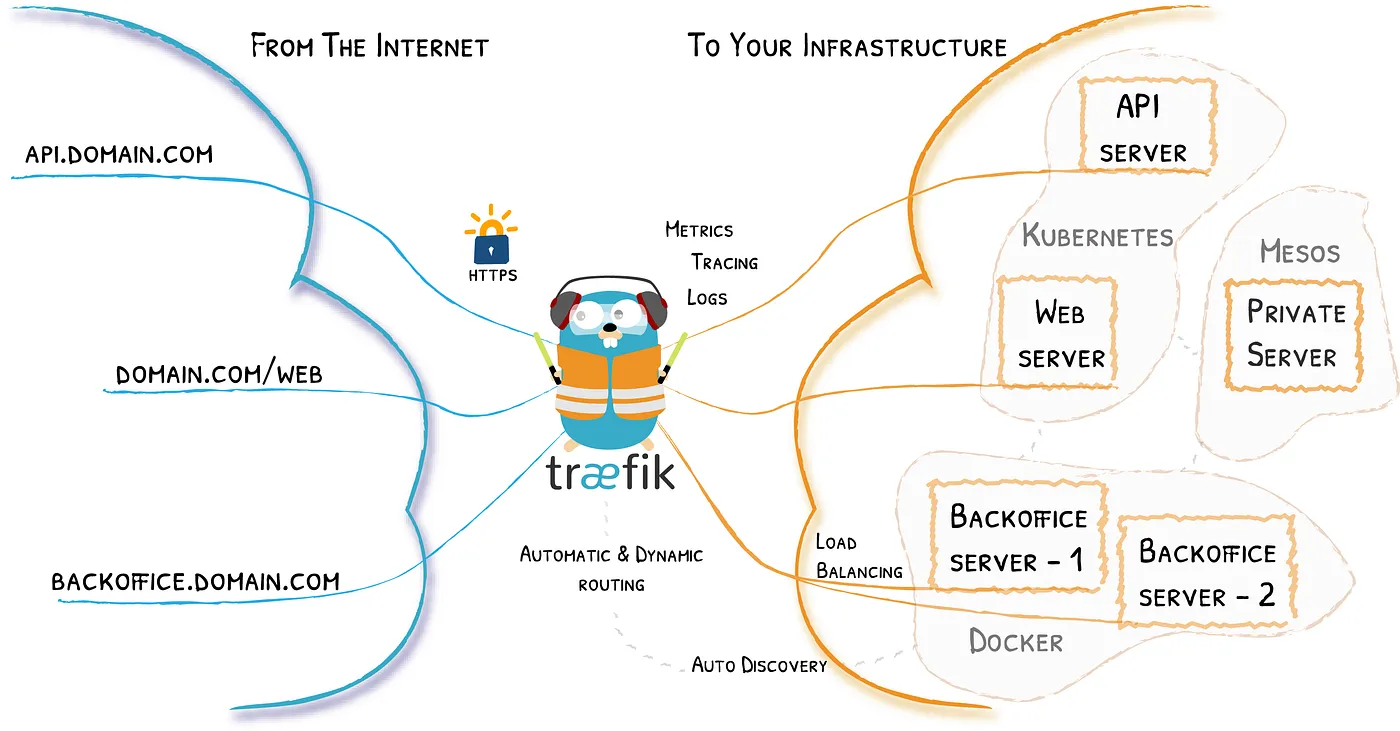

- Services:

- Provide stable endpoints for accessing the Pods. Services abstract the Pod IP addresses, making it easy to access the application without needing to track individual Pods.

- Ingress:

- Manage external access to the services in the cluster, typically HTTP. Ingress can provide load balancing, SSL termination, and name-based virtual hosting.

Creating Kubernetes Manifests

- Define Your Deployment:

- Create a YAML file (e.g., `deployment.yaml`) to define your application’s deployment, specifying the Docker image to use, the desired number of replicas, and configuration details.

- Define Your Service:

- Create a `service.yaml` file to define a Service that exposes your application Pods to other Pods within the cluster or externally.

- Define Your Ingress (if necessary):

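- If you need to expose your Service to the outside world over HTTP, create an `ingress.yaml` file to define Ingress rules for routing external traffic to your Service. The rules only take effect if an Ingress controller (for example, ingress-nginx) is running in the cluster.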

- Apply the Manifests:

- Use `kubectl apply -f deployment.yaml`, `kubectl apply -f service.yaml`, and `kubectl apply -f ingress.yaml` to deploy your application components to the Kubernetes cluster.
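As a rough illustration of what these three manifests can contain, the sketch below writes minimal versions into a `k8s` directory and applies them. Every name in it is an assumption made for the example: the `my-web-app` labels, the `registry.example.com` image, the `app.example.com` host, the container port 3000, and the resource requests.

```shell
mkdir -p k8s

# Deployment: three replicas of the application container.
cat > k8s/deployment.yaml <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-web-app
spec:
  replicas: 3
  selector:
    matchLabels:
      app: my-web-app
  template:
    metadata:
      labels:
        app: my-web-app
    spec:
      containers:
        - name: web
          image: registry.example.com/my-web-app:1.0.0  # hypothetical image
          ports:
            - containerPort: 3000
          resources:
            requests:
              cpu: 100m
              memory: 128Mi
          readinessProbe:        # traffic is sent only once this check passes
            httpGet:
              path: /
              port: 3000
EOF

# Service: stable in-cluster endpoint that forwards port 80 to the Pods.
cat > k8s/service.yaml <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: my-web-app
spec:
  selector:
    app: my-web-app
  ports:
    - port: 80
      targetPort: 3000
EOF

# Ingress: routes external HTTP traffic for one host to the Service.
cat > k8s/ingress.yaml <<'EOF'
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: my-web-app
spec:
  rules:
    - host: app.example.com      # hypothetical hostname
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: my-web-app
                port:
                  number: 80
EOF

# Apply everything in the directory when kubectl is available.
if command -v kubectl >/dev/null 2>&1; then
  kubectl apply -f k8s/ || echo "apply failed; is a cluster reachable?" >&2
fi
```

The Service selector and the Pod template labels must match (`app: my-web-app` here); otherwise the Service has no endpoints to route to.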

Managing Application Updates and Rollbacks

- Updating Applications:

- To update an application, change the Docker image version in your `deployment.yaml` and apply the changes with `kubectl apply -f deployment.yaml`.

- Kubernetes will perform a rolling update, gradually replacing the old Pods with new ones under the updated configuration. This avoids downtime as long as several replicas are running and the new Pods pass their readiness checks.

- Rollback:

- If an update leads to errors or issues, Kubernetes allows for easy rollback to a previous deployment state using `kubectl rollout undo deployment/your-deployment`.

- Scaling:

- Adjust the number of Pod replicas in your `deployment.yaml` file to scale the application up or down based on demand, and then apply the changes.

- Monitoring and Logging:

- Implement monitoring and logging to keep track of application performance and to quickly address any issues. Tools like Prometheus for monitoring and Elasticsearch for logging can be integrated with Kubernetes for this purpose.
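The update, rollback, and scaling steps can also be driven imperatively with kubectl, which is handy for experiments. The helper functions below are a sketch of that alternative rather than the file-based workflow described above; the Deployment name, container name, and image tag are hypothetical, and imperative changes like these will drift from your `deployment.yaml` unless you update the file as well.

```shell
DEPLOYMENT="my-web-app"                             # hypothetical Deployment name
CONTAINER="web"                                     # hypothetical container name
NEW_IMAGE="registry.example.com/my-web-app:1.1.0"   # hypothetical image tag

# Start a rolling update to NEW_IMAGE and wait for it to finish.
# If the rollout does not complete in time, undo it and report failure.
update_image() {
  kubectl set image "deployment/${DEPLOYMENT}" "${CONTAINER}=${NEW_IMAGE}" || return 1
  if ! kubectl rollout status "deployment/${DEPLOYMENT}" --timeout=120s; then
    echo "rollout did not complete; rolling back" >&2
    kubectl rollout undo "deployment/${DEPLOYMENT}"
    return 1
  fi
}

# Set the replica count to the number passed as the first argument.
scale_to() {
  kubectl scale "deployment/${DEPLOYMENT}" --replicas="$1"
}

# List the recorded revisions of the Deployment.
show_history() {
  kubectl rollout history "deployment/${DEPLOYMENT}"
}
```

For example, `update_image` followed by `scale_to 5` rolls out the new tag and then raises the replica count to five.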

By understanding and utilizing these Kubernetes objects and principles, you can effectively deploy, manage, and scale your web applications, taking full advantage of Kubernetes’ powerful orchestration capabilities.

Scaling and Managing the Application in Kubernetes

Kubernetes provides robust tools for scaling and managing web applications dynamically, ensuring they can handle varying loads efficiently and maintain optimal performance. Here’s how to manage scaling based on traffic and resource utilization and implement effective monitoring and logging.

Dynamic Scaling with Kubernetes

- Horizontal Pod Autoscaler (HPA):

- The HPA automatically adjusts the number of Pod replicas in a Deployment or ReplicaSet based on observed CPU utilization or other selected metrics.

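- To set up HPA, use the command `kubectl autoscale deployment your-deployment --cpu-percent=50 --min=1 --max=10`, where `--cpu-percent` is the target CPU utilization percentage and `--min` and `--max` define the minimum and maximum number of Pods. CPU-based scaling needs a metrics source such as Metrics Server in the cluster and CPU requests set on the containers, because utilization is computed relative to the request.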

- Custom Metrics Scaling:

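- Beyond CPU and memory usage, Kubernetes can scale applications based on custom metrics like HTTP requests per second. This requires a metrics pipeline such as Prometheus together with an adapter (for example, Prometheus Adapter) that exposes those metrics through the Kubernetes custom metrics API.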

- Cluster Autoscaler:

- For environments running in the cloud, the Cluster Autoscaler automatically adjusts the size of the Kubernetes cluster when it detects insufficient or excessive resources, ensuring the cluster has the right number of nodes to handle the workload.
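The same CPU-based autoscaling policy can be kept in version control as a manifest instead of being created with `kubectl autoscale`. The sketch below mirrors the 50% target and the 1 to 10 replica range from the command above; the `my-web-app` Deployment name is an assumption.

```shell
mkdir -p k8s

# Declarative equivalent of the kubectl autoscale command.
cat > k8s/hpa.yaml <<'EOF'
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: my-web-app
spec:
  scaleTargetRef:              # the workload whose replica count is adjusted
    apiVersion: apps/v1
    kind: Deployment
    name: my-web-app           # hypothetical Deployment name
  minReplicas: 1
  maxReplicas: 10
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 50   # percent of the containers' CPU request
EOF

# Apply the policy and show its current state when kubectl is available.
if command -v kubectl >/dev/null 2>&1; then
  kubectl apply -f k8s/hpa.yaml && kubectl get hpa my-web-app ||
    echo "could not apply the HPA; is a cluster reachable?" >&2
fi
```

Once an autoscaler manages a Deployment, it is usually best to stop setting `replicas` by hand, since the autoscaler will overwrite manual changes.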

Monitoring and Logging

- Monitoring Tools:

- Tools like Prometheus, integrated with Grafana for visualization, are commonly used to monitor Kubernetes clusters. They collect and analyze metrics from Pods, nodes, and other Kubernetes objects to provide insight into the application’s performance.

- Logging:

- The EFK stack (Elasticsearch, Fluentd, and Kibana), or Loki for more lightweight logging, can be used to aggregate and analyze logs from all the Pods across the cluster. This helps in troubleshooting and understanding application behavior.

- Implementing Monitoring and Logging:

- Deploy these tools as part of your Kubernetes cluster. Use Helm charts or Kubernetes manifests to set up and configure monitoring and logging agents on each node or Pod.

- Analyzing Data:

- Regularly review the collected data to identify trends, detect anomalies, and understand the application’s performance and health. Use this information to make informed decisions about scaling and resource allocation.
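One common way to deploy the monitoring side is the community `kube-prometheus-stack` Helm chart, which bundles Prometheus, Alertmanager, and Grafana. The function below is a minimal sketch that assumes Helm 3 is installed and pointed at your cluster; the release name and namespace are hypothetical choices, and the chart is only one option among several.

```shell
RELEASE_NAME="monitoring"           # hypothetical Helm release name
MONITORING_NAMESPACE="monitoring"   # hypothetical namespace

# Register the community chart repository, refresh the index, and install
# (or upgrade) the monitoring stack into its own namespace.
install_monitoring() {
  helm repo add prometheus-community https://prometheus-community.github.io/helm-charts &&
  helm repo update &&
  helm upgrade --install "$RELEASE_NAME" prometheus-community/kube-prometheus-stack \
    --namespace "$MONITORING_NAMESPACE" --create-namespace
}
```

Calling `install_monitoring` and then `kubectl get pods -n monitoring` shows whether the components came up. Logging stacks can be installed the same way from their own charts.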

Best Practices for Scaling and Management

- Proactive Monitoring:

- Set up alerts based on key performance indicators to proactively address issues before they impact users. Tools like Alertmanager (integrated with Prometheus) can send notifications based on defined rules.

- Logging at Different Levels:

- Implement logging at various levels (application, infrastructure, network) to get a comprehensive view of the system’s state and behavior.

- Regular Review and Adjustment:

- Periodically review the scaling policies and performance metrics to ensure they align with the current application needs and usage patterns.

- Performance Testing:

- Regularly conduct performance and stress testing to understand how your application behaves under different load conditions and to validate your scaling strategies.

By leveraging Kubernetes’ dynamic scaling capabilities and robust monitoring and logging tools, you can ensure that your web application remains responsive, efficient, and reliable, adapting seamlessly to changing user demands and system load conditions.

Advanced Topics in Kubernetes and Docker

Implementing continuous integration and continuous deployment (CI/CD) pipelines and utilizing advanced Kubernetes features are crucial for modern web application development. These practices ensure efficient, reliable, and scalable application deployment and management.

Implementing CI/CD Pipelines

- Overview of CI/CD:

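- CI/CD automates the process of software delivery, from code updates to production deployment. CI (Continuous Integration) involves merging all developers’ working copies to a shared mainline several times a day and verifying each merge with an automated build and tests. CD stands for Continuous Delivery or Continuous Deployment: both automate the release of validated changes to selected infrastructure environments, and with Continuous Deployment every change that passes the pipeline goes to production without a manual approval step.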

- Setting Up CI/CD for Containerized Applications:

- Use tools like Jenkins, GitLab CI/CD, or GitHub Actions to automate the testing and deployment of your Docker containerized applications.

- Define pipelines in configuration files within your repository that specify the steps for building, testing, and deploying the application.

- Integration with Docker and Kubernetes:

- Configure your CI/CD tool to build Docker images and push them to a Docker registry (like Docker Hub or a private registry).

- Use Kubernetes manifests or Helm charts in your CI/CD pipelines to deploy the Docker containers to Kubernetes clusters.

- Automated Testing and Quality Assurance:

- Implement automated testing within your CI pipeline to ensure that every change passes the necessary tests before it is deployed.

- Include stages for unit testing, integration testing, and end-to-end testing to validate application functionality and performance.
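To make the pipeline stages concrete, here is a sketch of a GitHub Actions workflow that tests, builds, pushes, and deploys on every push to `main`. It is one possible layout under several assumptions: a Node.js project with `npm test`, a registry at `registry.example.com`, repository secrets named `REGISTRY_USER` and `REGISTRY_TOKEN`, a Deployment called `my-web-app` with a container named `web`, and a runner whose kubectl already has credentials for the cluster.

```shell
mkdir -p .github/workflows

# Quoted heredoc delimiter: nothing inside is expanded by the local shell.
cat > .github/workflows/deploy.yml <<'EOF'
name: build-and-deploy

on:
  push:
    branches: [main]

env:
  IMAGE: registry.example.com/my-web-app   # hypothetical image repository

jobs:
  build-and-deploy:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4

      - name: Install dependencies and run tests
        run: |
          npm ci
          npm test

      - name: Build image tagged with the commit SHA
        run: docker build -t "$IMAGE:$GITHUB_SHA" .

      - name: Log in and push
        run: |
          echo "${{ secrets.REGISTRY_TOKEN }}" | docker login registry.example.com -u "${{ secrets.REGISTRY_USER }}" --password-stdin
          docker push "$IMAGE:$GITHUB_SHA"

      # Assumes cluster credentials were configured for kubectl beforehand.
      - name: Roll out the new image
        run: |
          kubectl set image deployment/my-web-app web="$IMAGE:$GITHUB_SHA"
          kubectl rollout status deployment/my-web-app --timeout=120s
EOF

cat .github/workflows/deploy.yml
```

Tagging images with the commit SHA ties every running Pod back to an exact revision, which makes rollbacks and audits simpler than reusing a mutable tag.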

Utilizing Kubernetes Advanced Features

- Auto-Scaling:

- Beyond Horizontal Pod Autoscaler, consider Vertical Pod Autoscaler (VPA) and Cluster Autoscaler for managing resources more effectively. VPA adjusts the CPU and memory allocations for Pods, while Cluster Autoscaler adjusts the size of the cluster itself.

- Self-Healing Mechanisms:

- Kubernetes continuously monitors the state of Pods and can automatically restart containers that fail, replace Pods, reschedule Pods from failed nodes onto healthy ones, and withhold traffic from Pods until they pass the readiness checks you define.

- Service Discovery and Load Balancing:

- Kubernetes gives each Service a stable DNS name and virtual IP address and balances network traffic across the Pods behind that Service. This feature simplifies communication within the cluster and improves the distribution of processing tasks.

- Network Policies:

- Define Kubernetes network policies to control the flow of traffic between Pods and external services, enhancing the security and performance of your application.

- Secrets and Configuration Management:

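- Use Kubernetes Secrets to manage sensitive data like passwords, tokens, and keys. Secret values are only base64-encoded by default, so restrict access with RBAC and enable encryption at rest for stronger protection. ConfigMaps allow you to separate configuration artifacts from image content to keep containerized applications portable.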
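A small sketch can illustrate the last two items. It writes a ConfigMap and a NetworkPolicy to a file and defines a helper that creates a Secret from a value passed at call time. All names are hypothetical (`my-web-app`, `LOG_LEVEL`, `API_KEY`, the `ingress-nginx` namespace, port 3000), and the NetworkPolicy is only enforced if the cluster’s network plugin supports network policies.

```shell
mkdir -p k8s

cat > k8s/config-and-policy.yaml <<'EOF'
# Non-sensitive settings, kept outside the image.
apiVersion: v1
kind: ConfigMap
metadata:
  name: my-web-app-config
data:
  LOG_LEVEL: "info"
---
# Allow inbound traffic to the app Pods only from the ingress controller's
# namespace (assumed to be "ingress-nginx") on the application port.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: my-web-app-allow-ingress
spec:
  podSelector:
    matchLabels:
      app: my-web-app
  policyTypes:
    - Ingress
  ingress:
    - from:
        - namespaceSelector:
            matchLabels:
              kubernetes.io/metadata.name: ingress-nginx
      ports:
        - protocol: TCP
          port: 3000
EOF

# Create the Secret from a value supplied at call time, so the value is
# never written to a manifest or baked into an image.
create_api_secret() {
  kubectl create secret generic my-web-app-secrets --from-literal=API_KEY="$1"
}
```

A Deployment can then load both into the container environment through `envFrom` with a `configMapRef` and a `secretRef`.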

Implementing advanced CI/CD pipelines and utilizing the full spectrum of Kubernetes features can significantly improve the efficiency, reliability, and scalability of web application development and deployment. These practices not only streamline the development process but also ensure that applications are robust, secure, and capable of meeting dynamic market demands.

Real-World Case Studies of Scalable Web Applications Using Docker and Kubernetes

Understanding how Docker and Kubernetes function in real-world scenarios can provide valuable insights into their capabilities and benefits. Here are some examples of successful implementations and the lessons learned from deploying and managing large-scale web applications.

Case Study 1: Online Retail Giant

- Background:

A leading online retail company migrated its services to Docker and Kubernetes to handle millions of transactions daily and support its global customer base.

- Implementation:

They containerized their microservices using Docker and deployed them across a global Kubernetes cluster, allowing for seamless scaling and management.

- Outcome:

The migration resulted in improved deployment speeds, lower server costs through better resource utilization, and increased resilience during traffic spikes.

- Lessons Learned:

- Infrastructure as Code (IaC): Adopting IaC practices for container orchestration helped in managing complex deployments and scaling operations efficiently.

- Microservices Scalability: Kubernetes excels in scaling microservices, demonstrating the ability to handle traffic dynamically and maintain system stability.

Case Study 2: Streaming Service Platform

- Background:

A popular streaming service needed a robust infrastructure to deliver content reliably to millions of users worldwide.

- Implementation:

They used Docker to containerize their media processing services and Kubernetes to orchestrate these containers, implementing a multi-region deployment strategy.

- Outcome:

The platform achieved high availability and could automatically adjust to the demand, maintaining optimal streaming quality even during peak usage.

- Lessons Learned:

- Disaster Recovery: Effective use of Kubernetes’ regional clusters and replication features ensured disaster recovery and uninterrupted service.

- Performance Monitoring: Integrating comprehensive monitoring tools was crucial for real-time performance tracking and proactive issue resolution.

Case Study 3: Financial Services Application

- Background:

A financial services application required a secure and scalable environment to process sensitive customer data and transactions.

- Implementation:

The application was deployed on a Kubernetes-managed cloud environment, utilizing Docker containers for each of the service components, ensuring secure and isolated processing.

- Outcome:

They experienced improved operational efficiency, better security compliance, and the flexibility to scale services in response to market changes.

- Lessons Learned:

- Security and Compliance: In highly regulated industries, Kubernetes and Docker facilitated compliance with stringent security requirements while maintaining scalability.

- Resource Management: Effective use of Kubernetes’ resource management features ensured that critical services had the necessary resources without overprovisioning.

These case studies highlight the transformative potential of Docker and Kubernetes in deploying scalable, efficient, and reliable web applications. They underscore the importance of strategic planning, the need for robust security and compliance measures, and the benefits of leveraging advanced container orchestration features to meet business objectives and customer needs.

Conclusion

In conclusion, Docker and Kubernetes have proven to be game-changers in the development and deployment of scalable web applications. Through real-world case studies, we’ve seen how these technologies enable organizations to handle massive scale, improve operational efficiencies, and maintain high levels of reliability and security.

The journey of containerization and orchestration with Docker and Kubernetes not only optimizes resource utilization but also fosters a more agile and responsive development environment. Organizations can deploy updates faster, manage services more effectively, and scale dynamically in response to real-time demand.

The key lessons from deploying large-scale web applications emphasize the importance of a well-thought-out infrastructure, the ability to adapt to changing needs, and the continuous monitoring and optimization of resources. Additionally, embracing a DevOps culture that integrates CI/CD pipelines with container orchestration can significantly streamline the development lifecycle.

As the technology landscape evolves, Docker and Kubernetes will continue to be pivotal in shaping the future of scalable web application development. Their ongoing development and the growing ecosystem around them offer exciting opportunities for innovation and improvement.

For organizations and developers looking to build resilient, efficient, and scalable web applications, Docker and Kubernetes represent essential tools in the modern software development toolkit. By leveraging these powerful technologies, developers can ensure that their applications are not only prepared to meet the demands of today but are also adaptable for the challenges of tomorrow.