Kubernetes is an open-source platform for automating the deployment, scaling, and management of containerized applications. It offers a robust set of orchestration features, such as automatic scaling, self-healing, and rolling updates, and has become the de facto standard for running containers in production. As the number of applications and resources grows, however, managing a Kubernetes cluster can become intricate and demanding.

To streamline your workflow and sidestep potential issues, I’ve assembled a list of indispensable tools for Kubernetes engineers.

Overview

This article explains how to use each tool effectively and introduces best practices for integrating it into your workflow. Following these recommendations helps you avoid common pitfalls and get the most out of each tool. Let’s dive in!

kubectl

kubectl is the command-line utility for managing Kubernetes resources. It lets you interact with the cluster to create, modify, and delete resources. Common tasks include creating deployments, scaling replicas, and updating configuration. Mastering kubectl speeds up your workflow and makes routine tasks efficient.

To use kubectl well, you need to understand the basics of its syntax. The general form of a kubectl command is:

`kubectl [command] [TYPE] [NAME] [flags]`

- command: The action intended for the resource(s), such as create, get, delete, apply, etc.

- TYPE: The category of Kubernetes resource under management, like pod, deployment, or service.

- NAME: The designated name of the managed resource.

- flags: Extra options or parameters accompanying the command.
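As a rough illustration of this syntax, the sketch below maps a few common commands onto the `[command] [TYPE] [NAME] [flags]` pattern. The resource name `web`, the `staging` namespace, the `deployment.yaml` file, and the `run` helper are hypothetical and not part of kubectl. The helper only prints each command unless `DRY_RUN=0` is set, so the block can be pasted without touching a cluster.

```shell
# Hypothetical helper: print each command by default; set DRY_RUN=0 to execute it.
run() { if [ "${DRY_RUN:-1}" = "1" ]; then echo "$*"; else "$@"; fi; }

# kubectl [command] [TYPE] [NAME] [flags]
run kubectl get pods --namespace staging        # list pods; omitting NAME selects every pod
run kubectl describe deployment web             # detailed state and recent events for one deployment
run kubectl scale deployment web --replicas=3   # change the replica count
run kubectl apply -f deployment.yaml            # create or update resources from a manifest file
run kubectl delete service web                  # remove a single named resource
```

Running the block as-is echoes the five commands. Exporting `DRY_RUN=0` first would send them to whichever cluster your current context points at.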

When integrating kubectl into your workflow, consider the following best practices:

- Learn the basics of Kubernetes resource management. Before using `kubectl`, make sure you understand core Kubernetes concepts such as pods, deployments, services, and namespaces.

- Use descriptive names for resources. Meaningful names make resources easier to identify later.

- Use the `kubectl get` and `kubectl describe` commands to inspect resources. They show the current state and details of your resources, which helps in identifying and resolving issues.

- For further details, consult the Kubernetes documentation on `kubectl` usage conventions.

kubectx

kubectx is a tool for listing and switching between kubectl contexts. It acts as a user-friendly front end to the Kubernetes configuration file, which makes moving between contexts quick.

Usage Instructions:

- Switch Contexts: Typing `kubectx` followed by the context name activates that context. For instance, `kubectx my-cluster` sets the active context to “my-cluster.”

- List Contexts: Entering `kubectx` without parameters lists all available contexts.

- Rename Contexts: To make contexts easier to identify, use the `=` sign. As an example, `kubectx new-name=old-name` renames the “old-name” context to “new-name.”

- Delete Contexts: To remove a context, use the `-d` option, followed by the context name.

kubens

kubens is a utility for switching between Kubernetes namespaces within your current context.

Usage Instructions:

- Switch Namespaces: Enter `kubens` followed by the desired namespace name to activate it. For instance, `kubens my-namespace` designates “my-namespace” as active.

- List Namespaces: As with `kubectx`, entering `kubens` without any parameters lists all namespaces within the current context.
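The kubectx and kubens operations described above could be strung together as in the following sketch. The context and namespace names are placeholders, and the `run` helper is a hypothetical wrapper (not part of either tool) that prints commands instead of executing them unless `DRY_RUN=0` is set.

```shell
# Hypothetical helper: print each command by default; set DRY_RUN=0 to execute it.
run() { if [ "${DRY_RUN:-1}" = "1" ]; then echo "$*"; else "$@"; fi; }

run kubectx                     # list all contexts in the kubeconfig
run kubectx my-cluster          # switch to the context named "my-cluster"
run kubectx prod=my-cluster     # rename "my-cluster" to "prod" (new-name=old-name)
run kubectx -d prod             # delete the context "prod"

run kubens                      # list namespaces in the active context
run kubens my-namespace         # make "my-namespace" the active namespace
```

Note the argument order in the rename form: the new name goes on the left of the `=` sign.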

Helm

Helm is a package manager for Kubernetes that simplifies deploying applications to a cluster. Its templating engine lets you define and manage complex applications, and a single command can install, upgrade, or manage an application. Using Helm can greatly reduce manual effort by automating deployment and application management tasks.

To optimize Helm usage, heed these suggestions:

- Understand chart structure: A chart is a directory containing the configuration files and resource templates an application needs to run. Knowing this layout makes it easier to tailor charts to specific requirements.

- Use the `helm lint` and `helm template` commands for validation: Helm provides commands that validate and test charts before installation. Running them can help identify problems and check chart compatibility with the Kubernetes cluster.

- Integrate Helm with CI/CD pipelines: Incorporating Helm within CI/CD pipelines ensures consistent and reliable application deployment on Kubernetes clusters.
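To make the chart structure and validation steps more concrete, here is a minimal sketch that scaffolds a tiny chart in a temporary directory and then lists the lint, render, and install commands. The chart name `hello-chart`, the release name `demo`, the `greeting` value, and the `run` helper are all made up for illustration. The helper only prints the Helm commands unless `DRY_RUN=0` is set.

```shell
# Hypothetical helper: print each command by default; set DRY_RUN=0 to execute it.
run() { if [ "${DRY_RUN:-1}" = "1" ]; then echo "$*"; else "$@"; fi; }

# Scaffold a minimal chart: metadata, default values, and one template.
chart="$(mktemp -d)/hello-chart"
mkdir -p "$chart/templates"

cat > "$chart/Chart.yaml" <<'EOF'
apiVersion: v2
name: hello-chart
description: Minimal example chart
version: 0.1.0
EOF

cat > "$chart/values.yaml" <<'EOF'
greeting: hello
EOF

cat > "$chart/templates/configmap.yaml" <<'EOF'
apiVersion: v1
kind: ConfigMap
metadata:
  name: {{ .Release.Name }}-config
data:
  greeting: {{ .Values.greeting | quote }}
EOF

run helm lint "$chart"                      # static checks on chart structure and templates
run helm template demo "$chart"             # render the manifests locally without installing
run helm upgrade --install demo "$chart"    # install the release, or upgrade it if it already exists
```

In a CI/CD pipeline, the lint and template steps would typically run on every change, with the upgrade step reserved for the deployment stage.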

Prometheus

Prometheus is a monitoring and alerting system that collects a wide range of metrics from your cluster; it is commonly installed through Helm. Its query language, PromQL, supports data analysis and visualization. Prometheus gives you insight into cluster health, lets you track application performance, and helps identify bottlenecks. Its scalability and expressive query language make it invaluable.

To maximize the efficacy of Prometheus, consider:

- Using labels for precise queries: Labels in Prometheus classify and group metrics. Well-crafted queries can filter metrics by attributes such as application type, environment, or version.

- Using Alertmanager for notifications: Alertmanager delivers the alerts that fire from rules over Prometheus metrics, giving timely notice of anomalies or cluster issues.

- Integrating Prometheus with Kubernetes service discovery: This ensures the automatic detection and monitoring of Kubernetes services and applications.

- Pairing Prometheus with other Kubernetes tools: Combining it with tools like Istio, Kubeless, and Kubeflow can offer a broad view of both your Kubernetes cluster and its applications.
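The label-based querying and alerting described above might look like the following sketch, which writes a single alerting rule to a temporary file. It assumes the cAdvisor metric `container_cpu_usage_seconds_total` is being scraped from the kubelet. The `production` namespace, the 0.9-core threshold, the rule names, and the `run` helper are illustrative assumptions. The helper prints the validation command rather than running it unless `DRY_RUN=0` is set.

```shell
# Hypothetical helper: print each command by default; set DRY_RUN=0 to execute it.
run() { if [ "${DRY_RUN:-1}" = "1" ]; then echo "$*"; else "$@"; fi; }

rules="$(mktemp -d)/pod-cpu.rules.yml"

# The quoted 'EOF' keeps the shell from expanding $labels inside the template.
cat > "$rules" <<'EOF'
groups:
  - name: pod-cpu
    rules:
      - alert: HighPodCpu
        # The {namespace="production"} matcher filters series; "sum by (pod)" groups them per pod.
        expr: sum by (pod) (rate(container_cpu_usage_seconds_total{namespace="production"}[5m])) > 0.9
        for: 10m
        labels:
          severity: warning
        annotations:
          summary: "Pod {{ $labels.pod }} has been above 0.9 CPU cores for 10 minutes"
EOF

run promtool check rules "$rules"   # validate the rule file before loading it into Prometheus
```

Once Prometheus loads a rule like this, a firing alert is handed to Alertmanager, which decides where the notification goes.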

Grafana

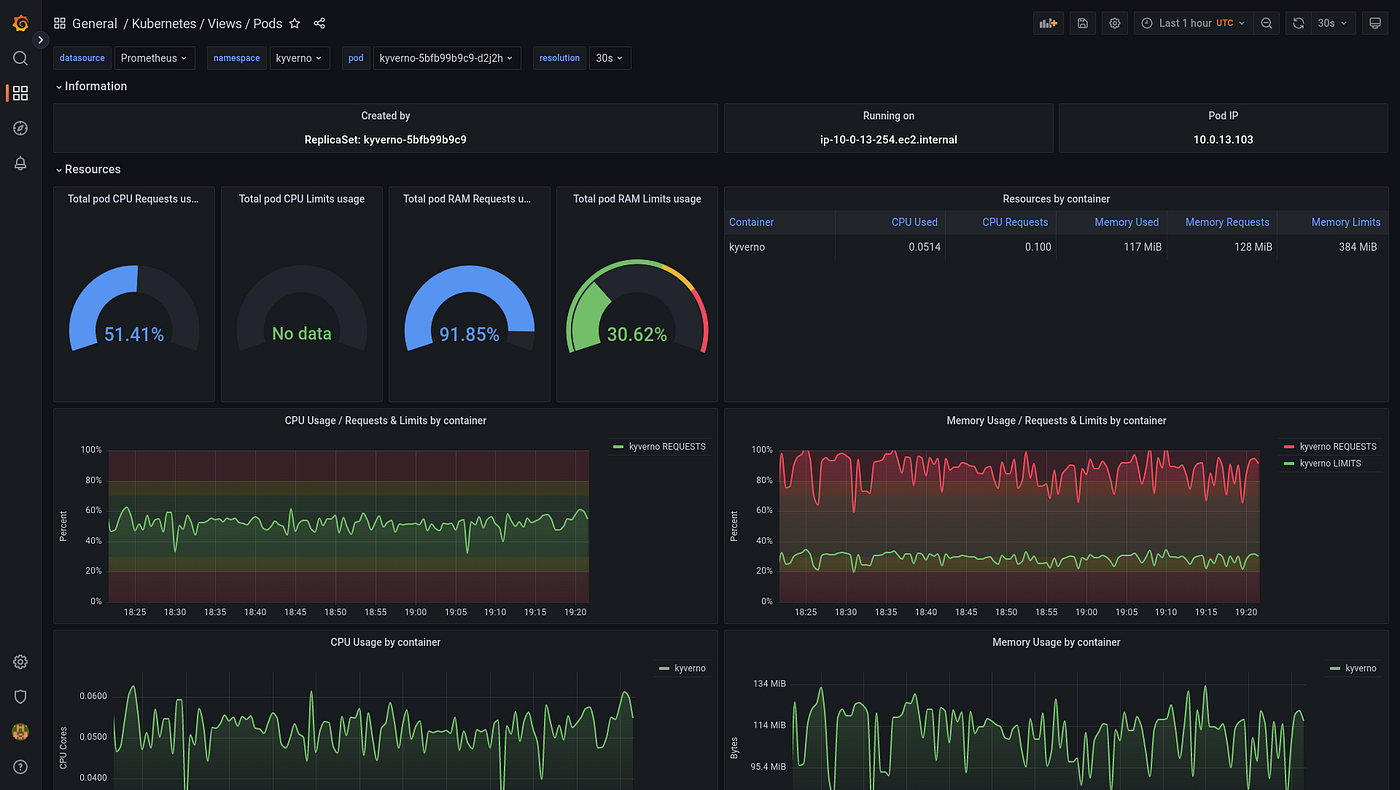

Grafana is a data visualization tool for building custom dashboards and charts from Kubernetes metrics. Its interface supports custom alerts and notifications, resource monitoring, application performance tracking, and cluster health analysis. Grafana gives you valuable insight into your Kubernetes resources and helps you keep them performing well.

Examples of Grafana Dashboards and Visualizations:

Grafana offers an array of pre-configured dashboards and visualizations for various data sources. Here are a few example dashboards tailored for Kubernetes:

- Kubernetes Node Dashboard: This dashboard gives detailed insight into the nodes of your Kubernetes cluster, with visualizations for CPU usage, memory consumption, network traffic, and storage utilization.

- Kubernetes Pod Dashboard: This dashboard offers comprehensive information about the pods active in your Kubernetes cluster. It features visualizations for CPU usage, memory usage, and network traffic.

- Kubernetes Deployment Dashboard: This dashboard provides an in-depth view of the deployments in your Kubernetes cluster, including visualizations detailing deployment status, pod status, and resource utilization.

Istio

Istio is an open-source service mesh platform for managing communication between the services of microservices-based applications. As Kubernetes clusters grow and become more complex, managing and securing communication among services becomes a challenge. Istio addresses this by adding a layer of abstraction above the underlying infrastructure, which lets engineers manage their services at a higher level.

A key advantage of Istio is that it simplifies service mesh management. Engineers can handle traffic routing, load balancing, and service-to-service authentication in a consistent, unified way across many services.

Istio also has robust traffic management capabilities. For instance, engineers can split traffic among multiple versions of a service to support canary deployments or A/B testing. Advanced routing features such as load balancing and circuit breaking improve the performance and reliability of microservices-based applications.
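A canary rollout of the kind mentioned above could be sketched as follows: a DestinationRule names two versions of a service, and a VirtualService sends 90% of traffic to one and 10% to the other. The `reviews` service, the `v1`/`v2` subsets, and the `version` pod labels are hypothetical. The sketch also assumes your Istio installation serves the `networking.istio.io/v1beta1` API. The `run` helper is an illustrative wrapper that prints the apply command unless `DRY_RUN=0` is set.

```shell
# Hypothetical helper: print each command by default; set DRY_RUN=0 to execute it.
run() { if [ "${DRY_RUN:-1}" = "1" ]; then echo "$*"; else "$@"; fi; }

manifest="$(mktemp -d)/reviews-canary.yaml"

cat > "$manifest" <<'EOF'
apiVersion: networking.istio.io/v1beta1
kind: DestinationRule
metadata:
  name: reviews
spec:
  host: reviews
  subsets:            # each subset selects pods by their "version" label
    - name: v1
      labels:
        version: v1
    - name: v2
      labels:
        version: v2
---
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: reviews
spec:
  hosts:
    - reviews
  http:
    - route:
        - destination:
            host: reviews
            subset: v1
          weight: 90  # stable version keeps most of the traffic
        - destination:
            host: reviews
            subset: v2
          weight: 10  # canary version receives a small share
EOF

run kubectl apply -f "$manifest"
```

Shifting the canary forward is then a matter of editing the two weights, which must add up to 100, and applying the file again.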

Vault

Developed by HashiCorp, Vault is a tool for securely storing and accessing secrets, from tokens and passwords to certificates and encryption keys. Within Kubernetes, Vault is frequently used to manage application secrets in a safe and controlled manner.

How to use:

- Install Vault: The installation process is environment-specific, but HashiCorp offers binaries for direct download and execution. Once Vault is installed, start a Vault server and communicate with it via either the Vault CLI or the HTTP API.

- Initialize Vault: A new Vault server starts in a sealed state. Before it can be used, it must be initialized, which generates the encryption key that protects the data.

- Seal/Unseal Process: To protect the encryption key, Vault starts sealed: the data is encrypted and Vault does not yet know how to decrypt it. To operate, Vault must be unsealed by supplying the unseal keys created during initialization.

- Write, Read, and Delete Secrets: After initialization and unsealing, Vault is ready to store and retrieve secrets. For example, `vault kv put secret/hello foo=world` stores a secret, while `vault kv get secret/hello` retrieves it.

Best practices:

- Safeguard your unseal keys, vital for Vault’s security. Distribute them among trusted organization members and ensure their safe storage.

- Activate Audit Logging to document every Vault interaction, assisting in identifying unauthorized access or security breaches.

- Implement Policies and Tokens. Policies define access permissions in Vault, while tokens serve as the primary authentication method.
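Put together, the usage steps and best practices above might look like this sketch of a first session with the Vault CLI. The share counts, the audit log path, the policy name `hello-read`, and the `run` helper are assumptions for illustration. The policy path also assumes a version 2 KV secrets engine mounted at `secret/`. The helper prints each command rather than executing it unless `DRY_RUN=0` is set.

```shell
# Hypothetical helper: print each command by default; set DRY_RUN=0 to execute it.
run() { if [ "${DRY_RUN:-1}" = "1" ]; then echo "$*"; else "$@"; fi; }

# One-time setup: split the unseal key into 5 shares, any 3 of which can unseal.
run vault operator init -key-shares=5 -key-threshold=3
run vault operator unseal            # prompts for one unseal key; repeat until the threshold is met

# Log every request and response to a file (the path is an assumption).
run vault audit enable file file_path=/var/log/vault_audit.log

# Store and read back a secret.
run vault kv put secret/hello foo=world
run vault kv get secret/hello

# A read-only policy for that secret, written to a temporary file.
policy="$(mktemp -d)/hello-read.hcl"
cat > "$policy" <<'EOF'
path "secret/data/hello" {
  capabilities = ["read"]
}
EOF
run vault policy write hello-read "$policy"
run vault token create -policy=hello-read   # issue a token limited to that policy

# Clean up the example secret.
run vault kv delete secret/hello
```

Splitting the unseal key into shares is what makes it practical to distribute the keys among several trusted people, since no single holder can unseal Vault alone.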

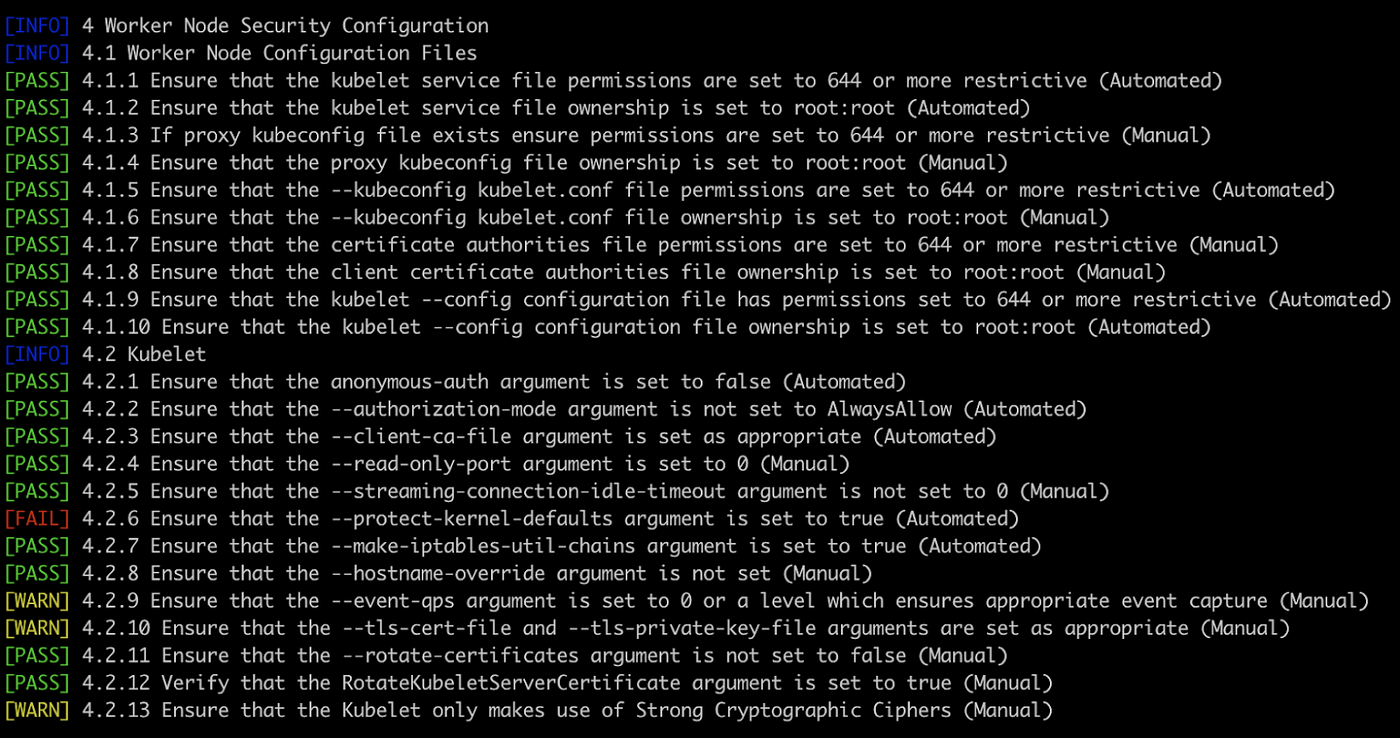

Kube-bench

Kube-bench helps Kubernetes engineers assess whether their clusters comply with established security practices, specifically the CIS Kubernetes Benchmark. This open-source tool automates the security checks and can be run against both master and worker nodes, producing a comprehensive report on the cluster’s security standing.

Kube-bench’s checks cover authentication, authorization, network policies, and more. Examples of standard checks include:

- Confirming the API server runs with the `--anonymous-auth=false` flag, ensuring the Kubernetes API isn’t accessible anonymously.

- Checking that the kubelet operates with the `--rotate-certificates` flag for the rotation of TLS certificates, vital for secure communication.

- Verifying the installation of the network policy controller, essential for enforcing the cluster’s network policies.

Kube-bench’s report includes a summary of the cluster’s security health and details of any security issues it detected.
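To give a feel for what a flag check involves, the sketch below tests whether a process command line contains an expected flag and then shows how a Kube-bench run might be started. The `has_flag` function is a simplified illustration and not Kube-bench’s actual implementation, the sample command lines are made up, and the `run` helper is a hypothetical wrapper that prints the command unless `DRY_RUN=0` is set.

```shell
# Hypothetical helper: print each command by default; set DRY_RUN=0 to execute it.
run() { if [ "${DRY_RUN:-1}" = "1" ]; then echo "$*"; else "$@"; fi; }

# Simplified flag check: PASS when the command line ($1) contains the exact flag ($2).
has_flag() {
  case " $1 " in
    *" $2 "*) echo "PASS" ;;
    *)        echo "FAIL" ;;
  esac
}

# Sample command lines; on a real node they could be read from the process list.
has_flag "kube-apiserver --anonymous-auth=false --secure-port=6443" "--anonymous-auth=false"  # prints PASS
has_flag "kube-apiserver --anonymous-auth=true --secure-port=6443" "--anonymous-auth=false"   # prints FAIL

# Run the real checks for control-plane and worker node targets.
run kube-bench run --targets master,node
```

The real tool goes further than string matching: each check comes with a description and remediation advice in the report.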

Kustomize

Kustomize is a popular open-source tool for customizing Kubernetes resources. It lets developers manage configuration for Kubernetes applications without manually altering the original YAML files. This approach streamlines customization and eliminates the need to keep multiple copies of the same YAML files for different environments.

One primary advantage of Kustomize is that it treats Kubernetes manifests as code. Developers can use Git for version control of the configuration files, ensuring they are applied predictably and consistently. This becomes especially important in large, complex settings where several teams collaborate on a single application.

For optimal use of Kustomize, adhering to best practices is essential. These include:

- Retaining the base configuration files in their simplest and most generic form, with specific settings tailored for environments placed in overlays.

- Employing patches for altering particular configuration segments instead of modifying the original YAML files.

- Utilizing version control for managing configuration files and maintaining a record of changes.

- Testing configuration changes in a non-production environment before deploying them to production.
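The base-and-overlay layout these practices describe can be sketched as follows. The `web` deployment, the `nginx:1.27` image, the `production` overlay, and the `run` helper are illustrative assumptions. The helper prints the kubectl commands rather than running them unless `DRY_RUN=0` is set.

```shell
# Hypothetical helper: print each command by default; set DRY_RUN=0 to execute it.
run() { if [ "${DRY_RUN:-1}" = "1" ]; then echo "$*"; else "$@"; fi; }

demo="$(mktemp -d)"
mkdir -p "$demo/base" "$demo/overlays/production"

# Base: a generic deployment with no environment-specific settings.
cat > "$demo/base/deployment.yaml" <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web
spec:
  replicas: 1
  selector:
    matchLabels:
      app: web
  template:
    metadata:
      labels:
        app: web
    spec:
      containers:
        - name: web
          image: nginx:1.27
EOF

cat > "$demo/base/kustomization.yaml" <<'EOF'
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
resources:
  - deployment.yaml
EOF

# Overlay: reuse the base and patch only what differs in production.
cat > "$demo/overlays/production/kustomization.yaml" <<'EOF'
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
resources:
  - ../../base
patches:
  - path: replicas-patch.yaml
EOF

cat > "$demo/overlays/production/replicas-patch.yaml" <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web
spec:
  replicas: 3
EOF

run kubectl kustomize "$demo/overlays/production"   # render the patched manifests for review
run kubectl apply -k "$demo/overlays/production"    # apply the overlay to the cluster
```

The base file is never edited for production; the overlay's patch is the only place the replica count of 3 appears, which keeps the difference between environments easy to review in version control.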

k9s

k9s is a terminal-based user interface designed for interacting with Kubernetes clusters. This tool seeks to simplify the processes of navigating, monitoring, and managing applications deployed within Kubernetes.

How to use:

- Install k9s: Installation differs by operating system. Typically, you download the binary and add it to your PATH. After installation, run `k9s` in your terminal.

- Navigate: After initiating k9s, navigation can be accomplished using arrow keys or vi-style commands. k9s presents your current context and namespace, facilitating easy transitions between namespaces.

- Observe Resources: k9s offers a live overview of your cluster resources, enabling monitoring of pod statuses, delving into individual pods, checking logs, and more.

Best practices:

- Utilize Contexts: For those overseeing multiple clusters, k9s provides effortless context switching. Opt for descriptive context names to minimize potential confusion.

- Adopt Aliases: k9s lets you define short aliases for resources you open often, an effective way to speed up navigation.

- Safeguard Your Session: Starting k9s with the `--readonly` flag disables commands that modify the cluster, guarding against accidental changes.

Kubeflow

Kubeflow, an open-source machine learning platform tailored for Kubernetes, eases the deployment and management of machine learning processes. The platform encompasses a diverse array of tools and services, assisting engineers in constructing, training, and deploying machine learning models within a Kubernetes cluster.

Kubernetes is already well suited to deploying and managing containerized applications, and Kubeflow extends it to machine learning workflows. It offers an integrated suite of tools, spanning data preprocessing to monitoring, that supports the development and deployment of machine learning applications.

Engineers aspiring to deploy machine learning models on Kubernetes clusters can leverage the myriad benefits offered by Kubeflow. Some noteworthy features include:

- Scalability: Kubeflow simplifies the upscaling of machine learning processes, catering to extensive datasets and intricate models. The platform taps into Kubernetes’s scalability to efficiently manage resources and optimize performance.

- Automation: Kubeflow can automate tasks integral to machine learning model development and deployment, including data preprocessing and training. This frees engineers to concentrate on more sophisticated work.

- Portability: Kubeflow’s design emphasizes portability, so machine learning models can move across environments with little friction. This flexibility is valuable for organizations running their models across various cloud or on-premises environments.

Conclusion

Kubernetes is a robust and dynamic platform, but it presents real challenges for engineers orchestrating containerized applications. The tools covered here, from kubectl and Helm to Prometheus and Kubeflow, each bring distinct advantages that help refine workflows and optimize resource utilization. Using them well and following the best practices above will raise productivity and reduce errors. As Kubernetes becomes more prominent in modern software development, these tools will only grow more important to the success of Kubernetes-based applications.