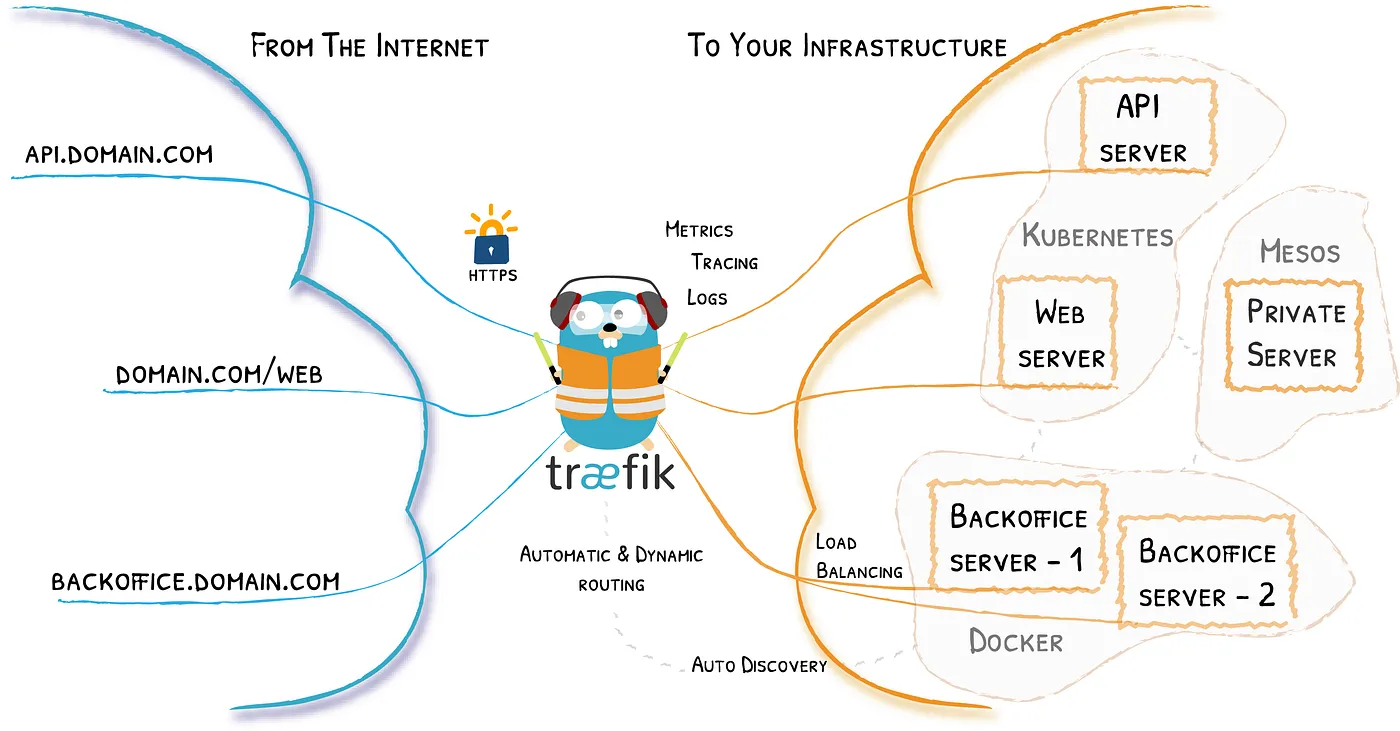

As web applications become more complex and traffic-intensive, choosing a high-performing reverse proxy server is critical for delivering fast and reliable content to users. Traefik and Nginx are two widely used reverse proxy servers, and both are capable of high HTTP performance. However, they handle HTTP requests and responses in quite different ways, which leads to differences in throughput, latency, and resource utilization.

In this article, I will compare the HTTP performance of Traefik v3 and Nginx, focusing on request rate, response time (latency), and data transfer rate. I will also discuss factors that can affect each tool's performance, such as configuration options, load balancing algorithms, and caching. By the end of the article, you should have a clearer picture of how Traefik v3 and Nginx compare on HTTP performance and be better placed to decide which one suits your use case.
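Both proxies in these tests spread requests over four identical whoami containers using round-robin, which is the default policy in each (both actually implement a weighted variant). As a rough mental model only, here is a minimal sketch of plain round-robin, assuming equal weights and a hypothetical `UPSTREAMS` list that mirrors the container names used in the benchmark; it is not the actual implementation of either proxy.

```python
from collections import Counter
from itertools import cycle

# Hypothetical upstream list mirroring the whoami containers in the benchmark.
UPSTREAMS = ["whoami-1:80", "whoami-2:80", "whoami-3:80", "whoami-4:80"]


class RoundRobin:
    """Plain (unweighted) round-robin: each request goes to the next server in turn."""

    def __init__(self, servers):
        if not servers:
            raise ValueError("need at least one upstream server")
        self._ring = cycle(servers)

    def pick(self):
        return next(self._ring)


if __name__ == "__main__":
    balancer = RoundRobin(UPSTREAMS)
    picks = [balancer.pick() for _ in range(8)]
    print(picks)
    # With equal weights, every server receives the same share of requests.
    print(Counter(picks))
```

Because the backends here are identical and stateless, this policy keeps them evenly loaded, so the balancing algorithm itself is probably not what separates the proxies in these tests.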

Purpose

The purpose of this article is to compare the HTTP performance of Traefik v3 and Nginx, two popular reverse proxy servers used in modern cloud-native environments. Traefik v3 is still in beta, but the Traefik team claims that it delivers 20% better HTTP performance than its predecessor, Traefik v2. I set out to test this claim and to provide an objective comparison of Traefik v3’s HTTP performance with that of Nginx. By running my own performance tests and sharing the results, I hope to help developers and DevOps teams make informed decisions when selecting a reverse proxy server for their applications.

Test Cases

To compare the HTTP performance of Traefik v3, Nginx, Traefik v2, and the whoami backend on its own (no proxy), I ran a series of tests with the wrk benchmarking tool. The backend was the whoami sample application (written in Go), and everything, including wrk, ran on a single virtual machine with 16 vCPUs, 32 GB of RAM, Fedora, and Docker v23.0.2. The benchmark requests hit whoami's /bench endpoint, which returns a minimal plain-text response (the body is just 1).

First, I measured the request rate each setup could sustain under a fixed load of 20 wrk threads and 1,000 concurrent connections for 60 seconds. Both Traefik versions used their default round-robin load balancing with no caching; the only transport tweak was a higher limit on idle keep-alive connections per backend host (--serverstransport.maxidleconnsperhost). Nginx also used its default round-robin load balancing with no caching, plus keep-alive connections to the upstreams. For each run, I recorded the achieved request rate and the response times.

All services were defined in a single Docker Compose file:

```yaml
version: "3.9"

services:
  traefik-v3.0:
    image: "traefik:v3.0"
    command:
      - "--api.insecure=true"
      - "--providers.docker=true"
      # Only containers labelled traefik.enable=true are exposed.
      - "--providers.docker.exposedbydefault=false"
      - "--entrypoints.web.address=:8000"
      # Keep a large pool of idle keep-alive connections to each backend.
      - "--serverstransport.maxidleconnsperhost=100000"
    ports:
      - "8000:8000"
    volumes:
      - "/var/run/docker.sock:/var/run/docker.sock:ro"

  traefik-v2.9:
    image: "traefik:v2.9"
    command:
      - "--api.insecure=true"
      - "--providers.docker=true"
      - "--providers.docker.exposedbydefault=false"
      - "--entrypoints.web.address=:8002"
      - "--serverstransport.maxidleconnsperhost=100000"
    ports:
      - "8002:8002"
    volumes:
      - "/var/run/docker.sock:/var/run/docker.sock:ro"

  nginx:
    image: nginx:alpine
    ports:
      - "8001:8001"
    volumes:
      - "./nginx.conf:/etc/nginx/nginx.conf:ro"
      - "./whoami.conf:/etc/nginx/conf.d/whoami.conf:ro"

  # Both Traefik instances discover the four whoami backends through these labels.
  whoami-1:
    image: "containous/whoami"
    # Published directly on the host for the no-proxy baseline.
    ports:
      - "8003:80"
    labels:
      - "traefik.enable=true"
      - "traefik.http.routers.go-benchmark.rule=Host(`benchmark.whoami`)"
      - "traefik.http.services.go-benchmark.loadbalancer.server.port=80"

  whoami-2:
    image: "containous/whoami"
    labels:
      - "traefik.enable=true"
      - "traefik.http.routers.go-benchmark.rule=Host(`benchmark.whoami`)"
      - "traefik.http.services.go-benchmark.loadbalancer.server.port=80"

  whoami-3:
    image: "containous/whoami"
    labels:
      - "traefik.enable=true"
      - "traefik.http.routers.go-benchmark.rule=Host(`benchmark.whoami`)"
      - "traefik.http.services.go-benchmark.loadbalancer.server.port=80"

  whoami-4:
    image: "containous/whoami"
    labels:
      - "traefik.enable=true"
      - "traefik.http.routers.go-benchmark.rule=Host(`benchmark.whoami`)"
      - "traefik.http.services.go-benchmark.loadbalancer.server.port=80"
```

Nginx's main configuration (nginx.conf, mounted by the Compose file):

```nginx
user nginx;
worker_processes auto;
worker_rlimit_nofile 200000;
pid /var/run/nginx.pid;

events {
    worker_connections 10000;
    use epoll;
    multi_accept on;
}

http {
    sendfile on;
    tcp_nopush on;
    tcp_nodelay on;
    keepalive_timeout 300;
    keepalive_requests 10000;
    types_hash_max_size 2048;

    open_file_cache max=200000 inactive=300s;
    open_file_cache_valid 300s;
    open_file_cache_min_uses 2;
    open_file_cache_errors on;

    server_tokens off;
    dav_methods off;

    include /etc/nginx/mime.types;
    default_type application/octet-stream;

    access_log /var/log/nginx/access.log combined;
    error_log /var/log/nginx/error.log warn;

    gzip off;
    gzip_vary off;

    include /etc/nginx/conf.d/*.conf;
    include /etc/nginx/sites-enabled/*.conf;
}
```

The upstream and virtual host definition (whoami.conf):

```nginx
upstream whoami {
    server whoami-1:80;
    server whoami-2:80;
    server whoami-3:80;
    server whoami-4:80;
    # Idle keep-alive connections to the upstreams cached per worker process.
    keepalive 300;
}

server {
    listen 8001;
    server_name benchmark.whoami;
    access_log off;
    error_log /dev/null crit;

    location / {
        proxy_pass http://whoami;
        # HTTP/1.1 and a cleared Connection header are needed for upstream keep-alive.
        proxy_http_version 1.1;
        proxy_set_header Connection "";
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        proxy_set_header X-Forwarded-Host $host;
        proxy_set_header X-Forwarded-Port 80;
        proxy_set_header X-Forwarded-Proto $scheme;
        proxy_set_header X-Forwarded-Server $server_addr;
        proxy_set_header X-Real-IP $remote_addr;
    }
}
```

Kernel tuning applied on the VM before the runs:

```shell
# Runtime-only settings: they are lost on reboot unless persisted (e.g. in /etc/sysctl.d/).
sysctl -w fs.file-max="9999999"
sysctl -w fs.nr_open="9999999"
sysctl -w net.core.netdev_max_backlog="4096"
sysctl -w net.core.rmem_max="16777216"
sysctl -w net.core.somaxconn="65535"
sysctl -w net.core.wmem_max="16777216"
sysctl -w net.ipv4.ip_local_port_range="1025 65535"
sysctl -w net.ipv4.tcp_fin_timeout="30"
sysctl -w net.ipv4.tcp_keepalive_time="30"
sysctl -w net.ipv4.tcp_max_syn_backlog="20480"
sysctl -w net.ipv4.tcp_max_tw_buckets="400000"
sysctl -w net.ipv4.tcp_no_metrics_save="1"
sysctl -w net.ipv4.tcp_syn_retries="2"
sysctl -w net.ipv4.tcp_synack_retries="2"
sysctl -w net.ipv4.tcp_tw_reuse="1"
sysctl -w vm.min_free_kbytes="65536"
sysctl -w vm.overcommit_memory="1"
```
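Because sysctl -w changes last only until the next reboot, it is worth confirming they are in effect before a run. A small sketch that reads the live values from /proc/sys, assuming a Linux host; the `EXPECTED` dictionary and the helper names are hypothetical and simply repeat a few of the values above.

```python
from pathlib import Path
from typing import Optional

# A few of the values set above; extend as needed.
EXPECTED = {
    "net.core.somaxconn": "65535",
    "net.ipv4.ip_local_port_range": "1025 65535",
    "net.ipv4.tcp_tw_reuse": "1",
    "fs.file-max": "9999999",
}


def sysctl_path(key: str) -> Path:
    """Map a dotted sysctl key to its file under /proc/sys (Linux only).

    Assumes no path component itself contains a dot (true for the keys above).
    """
    return Path("/proc/sys") / key.replace(".", "/")


def read_sysctl(key: str) -> Optional[str]:
    """Return the current value with whitespace normalised, or None if unreadable."""
    try:
        return " ".join(sysctl_path(key).read_text().split())
    except OSError:
        return None


if __name__ == "__main__":
    for key, want in EXPECTED.items():
        have = read_sysctl(key)
        status = "ok" if have == want else f"MISMATCH (have {have!r})"
        print(f"{key} = {want}: {status}")
```

Normalising whitespace matters for multi-value keys such as net.ipv4.ip_local_port_range, which the kernel prints tab-separated.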

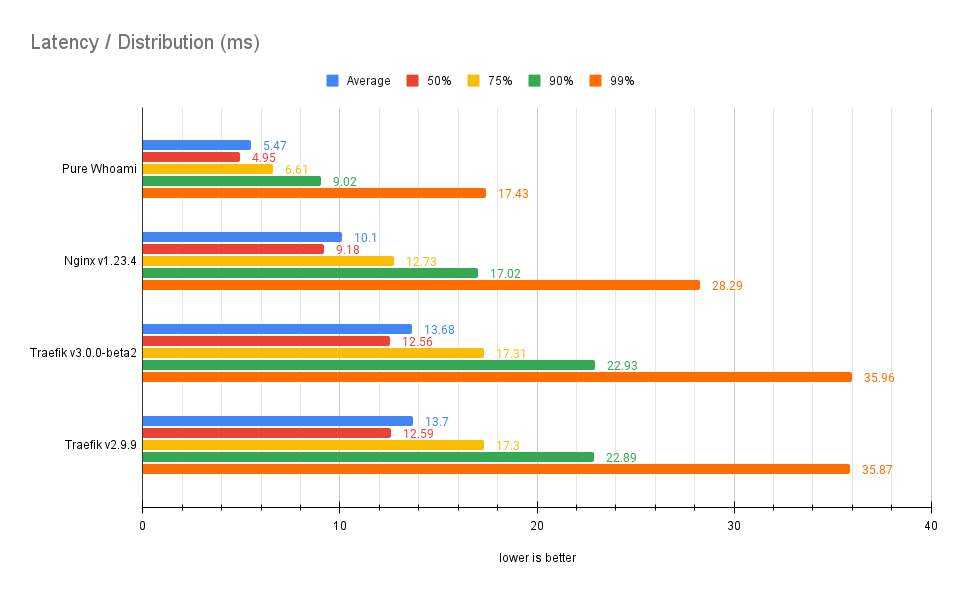

```shell
ulimit -n 9999999
```

Result

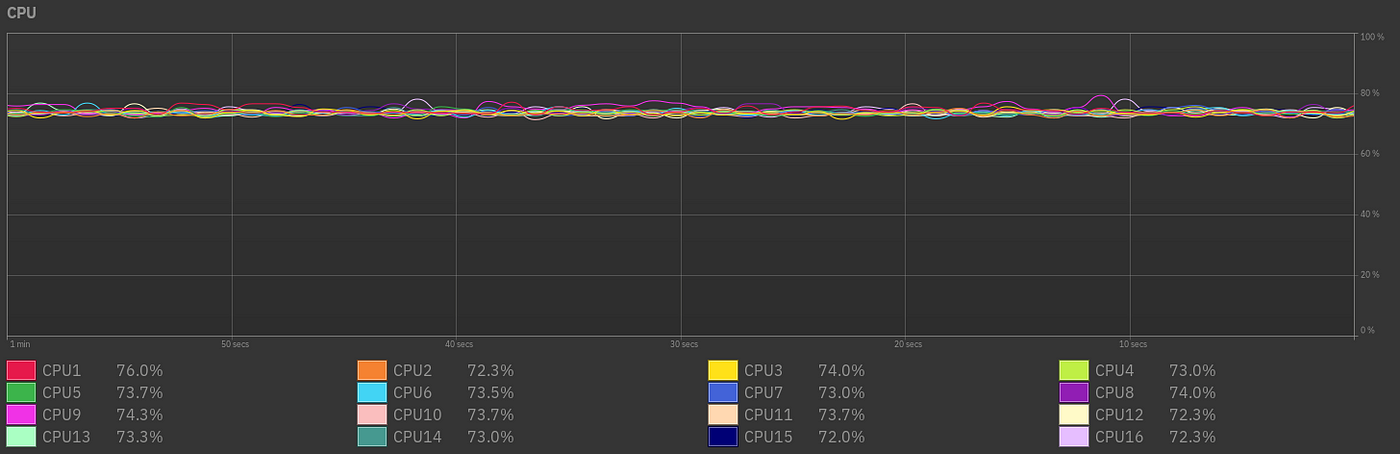

CPU Usage

Raw Results

Traefik v3.0.0-beta2

```text
wrk -t20 -c1000 -d60s -H "Host: benchmark.whoami" --latency http://127.0.0.1:8000/bench
Running 1m test @ http://127.0.0.1:8000/bench
  20 threads and 1000 connections
  Thread Stats   Avg      Stdev     Max   +/- Stdev
    Latency    13.68ms    7.08ms  93.26ms   72.09%
    Req/Sec     3.72k   312.65    10.22k    78.63%
  Latency Distribution
     50%   12.56ms
     75%   17.31ms
     90%   22.93ms
     99%   35.96ms
  4448396 requests in 1.00m, 432.72MB read
Requests/sec:  74019.30
Transfer/sec:      7.20MB
```

Nginx v1.23.4

```text
wrk -t20 -c1000 -d60s -H "Host: benchmark.whoami" --latency http://127.0.0.1:8001/bench
Running 1m test @ http://127.0.0.1:8001/bench
  20 threads and 1000 connections
  Thread Stats   Avg      Stdev     Max   +/- Stdev
    Latency    10.10ms    5.46ms  64.40ms   73.77%
    Req/Sec     5.06k   383.06    13.97k    73.18%
  Latency Distribution
     50%    9.18ms
     75%   12.73ms
     90%   17.02ms
     99%   28.29ms
  6047306 requests in 1.00m, 813.17MB read
Requests/sec: 100622.14
Transfer/sec:     13.53MB
```

Traefik v2.9.9

```text
wrk -t20 -c1000 -d60s -H "Host: benchmark.whoami" --latency http://127.0.0.1:8002/bench
Running 1m test @ http://127.0.0.1:8002/bench
  20 threads and 1000 connections
  Thread Stats   Avg      Stdev     Max   +/- Stdev
    Latency    13.70ms    7.04ms  91.19ms   72.17%
    Req/Sec     3.71k   273.76    14.31k    75.23%
  Latency Distribution
     50%   12.59ms
     75%   17.30ms
     90%   22.89ms
     99%   35.87ms
  4438613 requests in 1.00m, 431.77MB read
Requests/sec:  73853.32
Transfer/sec:      7.18MB
```

Pure whoami (no proxy, whoami-1 only)

```text
wrk -t20 -c1000 -d60s -H "Host: benchmark.whoami" --latency http://127.0.0.1:8003/bench
Running 1m test @ http://127.0.0.1:8003/bench
  20 threads and 1000 connections
  Thread Stats   Avg      Stdev     Max   +/- Stdev
    Latency     5.47ms    3.18ms  40.75ms   78.18%
    Req/Sec     9.21k   746.51    29.25k    74.50%
  Latency Distribution
     50%    4.95ms
     75%    6.61ms
     90%    9.02ms
     99%   17.43ms
  11014279 requests in 1.00m, 1.29GB read
Requests/sec: 183259.27
Transfer/sec:     22.02MB
```

Conclusion

Performance tests comparing Nginx and Traefik v3 for HTTP request handling gave clear results. Nginx achieved higher throughput than Traefik v3: 100,622 requests per second (rps) versus 74,019 rps, about 36% more. Traefik v3 also showed higher latency, with an average response time of 13.68 ms versus 10.10 ms for Nginx, and a 99th percentile of 35.96 ms versus 28.29 ms. In terms of transfer rate, Nginx moved 13.53 MB/s compared with Traefik v3’s 7.20 MB/s; this gap is wider than the request-rate gap because each response through Nginx was larger (about 141 bytes versus about 102 bytes, derived from the bytes read and the request counts).
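The throughput, latency, and transfer comparisons can be checked directly against the raw wrk output. A minimal sketch with the figures hard-coded from the raw results, assuming wrk's MB and GB are 1024-based units (the whoami total is only approximate because wrk rounded it to 1.29GB). It also applies Little's law: in a closed-loop test like this one, each of the 1,000 connections waits for a response before sending its next request, so requests per second should sit close to connections divided by mean latency. The helper names are hypothetical.

```python
# Figures copied from the raw wrk output (20 threads, 1000 connections, 60 s).
CONNECTIONS = 1000
RESULTS = {
    # name: (requests/sec, mean latency in ms, total requests, MiB read)
    "nginx": (100622.14, 10.10, 6047306, 813.17),
    "traefik-v3": (74019.30, 13.68, 4448396, 432.72),
    "traefik-v2": (73853.32, 13.70, 4438613, 431.77),
    "whoami": (183259.27, 5.47, 11014279, 1.29 * 1024),  # "1.29GB" is rounded
}


def bytes_per_response(name: str) -> float:
    """Average bytes read per response (assumes wrk's MB are 1024 * 1024 bytes)."""
    _, _, requests, mib = RESULTS[name]
    return mib * 1024 * 1024 / requests


def littles_law_rps(name: str) -> float:
    """Closed-loop estimate: throughput ~= concurrent connections / mean latency."""
    _, latency_ms, _, _ = RESULTS[name]
    return CONNECTIONS / (latency_ms / 1000)


if __name__ == "__main__":
    nginx_rps, nginx_ms = RESULTS["nginx"][:2]
    v3_rps, v3_ms = RESULTS["traefik-v3"][:2]
    direct_rps = RESULTS["whoami"][0]
    print(f"Nginx throughput vs Traefik v3: {nginx_rps / v3_rps:.3f}x")
    print(f"Traefik v3 mean latency vs Nginx: {v3_ms / nginx_ms:.3f}x")
    for name, (rps, *_rest) in RESULTS.items():
        print(
            f"{name:>10}: {bytes_per_response(name):6.1f} B/response, "
            f"{rps / direct_rps:6.1%} of direct whoami throughput, "
            f"Little's law predicts {littles_law_rps(name):,.0f} rps vs measured {rps:,.0f}"
        )
```

Two things stand out when the numbers are computed this way. Responses through Nginx average about 141 bytes versus about 102 through either Traefik version, presumably because of different response headers around the same one-byte body, so Transfer/sec exaggerates the gap and Requests/sec is the fairer metric. And the Little's law estimates land within about 2% of the measured rates, so the latency and throughput differences are largely two views of the same effect rather than independent findings.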

Most importantly for the question this article set out to answer, Traefik v3 did not deliver the 20% HTTP performance improvement over Traefik v2 claimed by the Traefik team: it reached 74,019 rps versus 73,853 rps for Traefik v2.9.9, a difference of about 0.2%, with nearly identical average latency (13.68 ms versus 13.70 ms). Nginx, meanwhile, outperformed both Traefik versions in request throughput and latency. Traefik v3 is still in beta and may improve further, but these tests suggest it may not be the best choice for applications with high traffic volumes and demanding performance requirements.
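To see how far the measured result is from the claim, the v3 gain over v2 and the request rate that a 20% improvement would require can be computed from the two Traefik runs. A trivial sketch using the Requests/sec figures from the raw results; the constant names are hypothetical.

```python
# Requests/sec from the raw wrk output (single 60 s run each).
TRAEFIK_V2_RPS = 73853.32  # Traefik v2.9.9
TRAEFIK_V3_RPS = 74019.30  # Traefik v3.0.0-beta2
CLAIMED_GAIN = 0.20        # improvement claimed for v3 over v2

measured_gain = TRAEFIK_V3_RPS / TRAEFIK_V2_RPS - 1
target_rps = TRAEFIK_V2_RPS * (1 + CLAIMED_GAIN)

if __name__ == "__main__":
    print(f"measured v3 gain over v2: {measured_gain:.2%}")
    print(f"rps needed for a {CLAIMED_GAIN:.0%} gain: {target_rps:,.0f}")
    print(f"shortfall vs that target: {target_rps - TRAEFIK_V3_RPS:,.0f} rps")
```

With only one 60-second run per target, a difference of roughly 0.2% is probably within run-to-run noise, so the fair reading is that v3 and v2 performed about the same in this setup.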

Overall, the choice between Nginx and Traefik v3 for HTTP request handling depends on the specific requirements of your web application. If raw request throughput and low latency are the primary concerns, Nginx may be the better option. On the other hand, Traefik’s dynamic configuration and automatic service discovery make it more flexible and easier to integrate into container-based environments; in this setup, for example, Traefik discovered the whoami containers from their Docker labels, while Nginx needed a hand-written upstream block. Other factors, such as ease of use and community support, should also be weighed when selecting a reverse proxy server. Ultimately, I recommend running your own performance tests and considering all relevant factors before making a final decision.
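If you do run your own tests, repeating each measurement several times makes small differences easier to judge. A rough driver sketch, assuming wrk is on the PATH, the Compose stack above is running on the same machine, and the output layout matches the raw results shown earlier; the helper names (`wrk_command`, `parse_wrk`, `run_all`) are hypothetical.

```python
import re
import subprocess

# Host ports from the Docker Compose file in this article.
TARGETS = {"traefik-v3": 8000, "nginx": 8001, "traefik-v2": 8002, "whoami-direct": 8003}
_TIME = {"us": 1e-6, "ms": 1e-3, "s": 1.0}


def wrk_command(port, threads=20, connections=1000, duration="60s"):
    """Same invocation as in the raw results, parameterised by target port."""
    return [
        "wrk", f"-t{threads}", f"-c{connections}", f"-d{duration}",
        "-H", "Host: benchmark.whoami", "--latency",
        f"http://127.0.0.1:{port}/bench",
    ]


def parse_wrk(text):
    """Extract requests/sec, mean latency and percentiles (in seconds) from wrk output."""
    rps = re.search(r"Requests/sec:\s+([\d.]+)", text)
    avg = re.search(r"^\s*Latency\s+([\d.]+)(us|ms|s)\b", text, re.M)
    pct = re.findall(r"^\s*(\d+)%\s+([\d.]+)(us|ms|s)\b", text, re.M)
    if rps is None or avg is None:
        raise ValueError("unrecognised wrk output")
    return {
        "rps": float(rps.group(1)),
        "avg_latency_s": float(avg.group(1)) * _TIME[avg.group(2)],
        "percentiles_s": {int(p): float(v) * _TIME[u] for p, v, u in pct},
    }


def run_all(runs=3):
    """Run every target `runs` times and return the parsed results per target."""
    results = {}
    for name, port in TARGETS.items():
        results[name] = [
            parse_wrk(subprocess.run(wrk_command(port), capture_output=True,
                                     text=True, check=True).stdout)
            for _ in range(runs)
        ]
    return results


# Traefik v3 run from the raw results, used to exercise the parser.
SAMPLE = """\
Running 1m test @ http://127.0.0.1:8000/bench
  20 threads and 1000 connections
  Thread Stats   Avg      Stdev     Max   +/- Stdev
    Latency    13.68ms    7.08ms  93.26ms   72.09%
    Req/Sec     3.72k   312.65    10.22k    78.63%
  Latency Distribution
     50%   12.56ms
     75%   17.31ms
     90%   22.93ms
     99%   35.96ms
  4448396 requests in 1.00m, 432.72MB read
Requests/sec:  74019.30
Transfer/sec:      7.20MB
"""

if __name__ == "__main__":
    print(parse_wrk(SAMPLE))
```

Calling run_all() performs real load tests and takes several minutes per target, so it is deliberately not invoked in the main block.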